When Engineers Say 'It's Beyond Your Understanding,' Here's What They Actually Mean

One engineering leader said this to me on a project 9 months behind schedule. After 34 AI projects, I knew what it actually meant: the problem wasn't the engineers. It was a broken requirements process no one had caught yet.

"This is beyond your understanding."

The engineering lead said it with absolute confidence.

We were two weeks into my AI capability assessment — or as leadership privately called it, “why the hell do we have nothing to ship after nine months?”

I’d been brought in as an independent consultant. One goal: figure out what the real problem was and get the team unstuck.

The dismissal didn’t surprise me. I’ve heard versions of it across 34 AI projects since 2009.

Lots of things are beyond my understanding:

Gluons.

The popularity of watching golf on TV.

PowerPoint being mistaken for progress.

But when a team stalls for months on a simple use case?

That’s not complexity.

That’s lack of clarity.

And when engineering jargon shows up as a substitute for shipped progress, it's almost always a distress signal.

The Kaleidoscope Trap (The Universal Pattern)

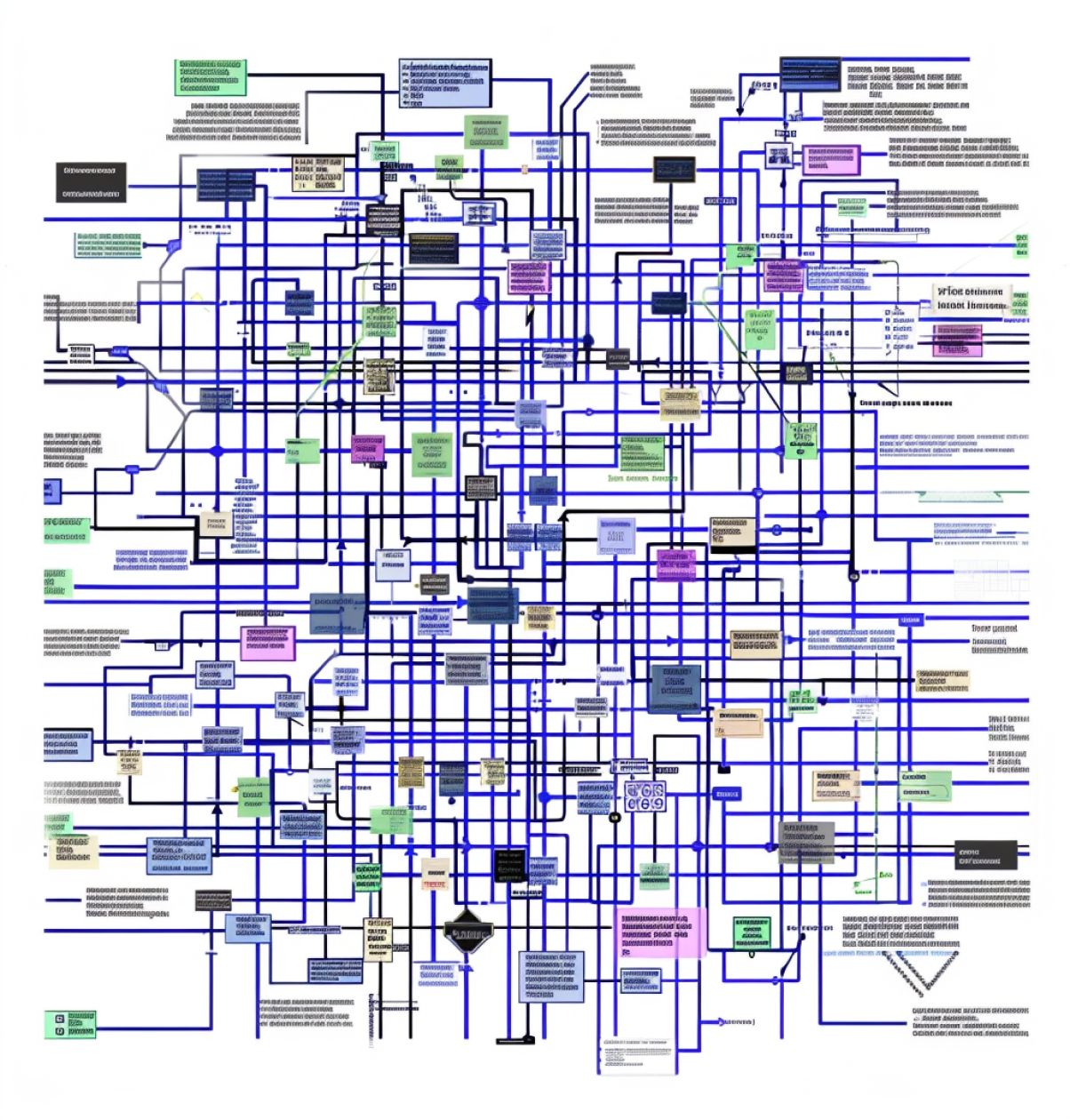

In generative AI, brilliant teams often fall into problems that look solvable — almost solvable — just one more iteration away.

But the puzzle never converges.

Rahul Vohra, CEO of Superhuman, explained it bluntly:

“SaaS used to be ‘move fast and ship.’ With generative AI it’s become, ‘We’re still not sure — let’s wait six more months.’”

This team was trapped in exactly that pattern.

The Netflix Profile Trap (The Real Case Study)

Here’s what they were actually building:

When a user asked a question, the AI would infer which of thousands of categories the user “probably wanted,” then answer using that category’s data.

It sounded sophisticated.

It was actually impossible.

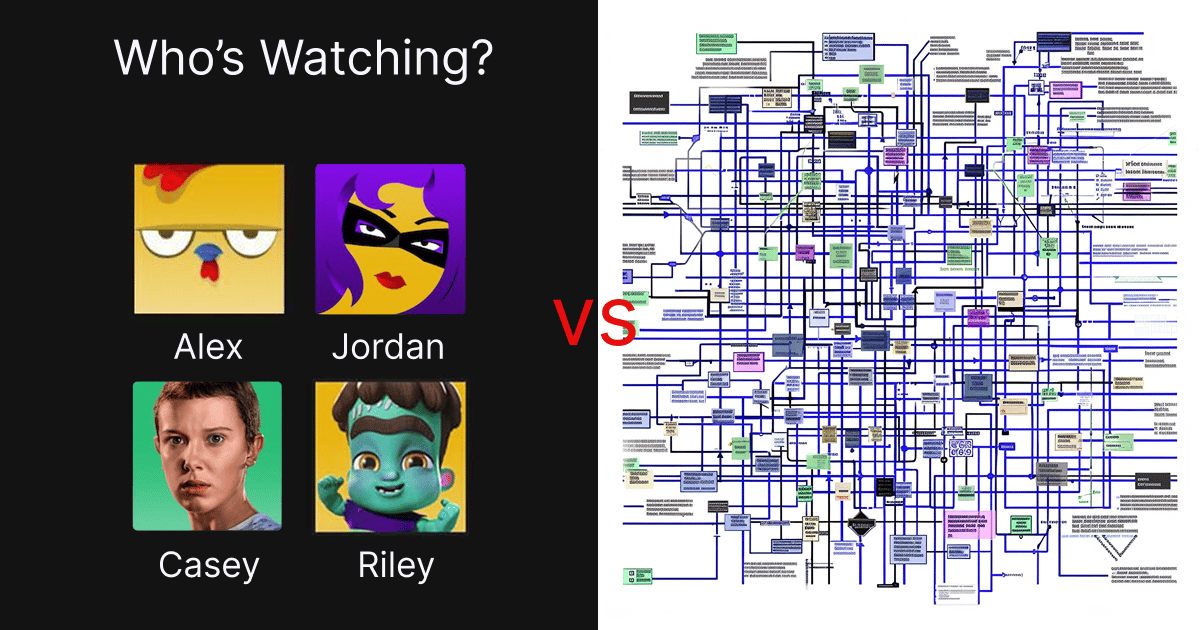

I call this the Netflix Profile Trap:

Imagine Netflix trying to infer whether you want the Kids profile or the Adult profile based only on your search query.

“Action thriller” — is that for you? Your teen?

“Anime” — adult content or children’s content?

Get it wrong, and trust is gone.

That’s why Netflix doesn’t infer your profile.

They ask you to select it explicitly.

Zero AI. Solves the right problem.

This team was doing the opposite — forcing AI to guess the user’s context from scratch, across thousands of options, with no training data.

Wrong layer. Wrong problem. Wrong architecture.

$2M and nine months spent failing to use AI to solve the wrong problem.

A guaranteed failure path disguised as “advanced AI.”

No wonder nine months had passed with nothing to ship.

What Customers Actually Wanted

So we “pointed the telescope at Saturn.”

In one week, talking with just eight customers, we learned:

They didn’t want AI picking categories for them.

They wanted a searchable catalog of categories.

With metadata, recency sorting, and favorites.

Per user, not per org.

Basically: the Netflix profile selection screen — first choose the context, then let AI help.

It was simple.

It was obvious.

And it was the opposite of what the team was building.

If we hadn’t intervened, they could have sunk years into a fundamentally unsolvable problem.

The Cost of Waiting vs. the Cost of Fixing It

This project was:

9+ months behind

On track to burn millions more

Architecturally misaligned with user reality

At risk of being cancelled in a board meeting

Quietly draining the VP of Product's career capital

This is the hidden risk of AI projects that never converge.

They don’t just fail — they take people down with them.

What We Did Instead

We reframed the problem entirely:

Users search and select a category first

AI provides powerful assistance within that context

Everything built at the user level

Ship an MVP in weeks, not months

Learn from usage and expand based on real customer behavior
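For technical readers, the reframed flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team's actual implementation: the names `Category`, `UserCatalog`, `select`, and `assist` are hypothetical, search is a plain substring match, "recency" is modeled as the time this user last selected a category, and `ask_model` stands in for whatever LLM call you use. What it shows is the ordering: the user picks the context from a per-user, searchable catalog, and only then does the AI answer, scoped to that one category.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Category:
    name: str
    description: str  # metadata shown alongside search results


@dataclass
class UserCatalog:
    """Hypothetical per-user view of a shared category catalog."""
    categories: list[Category]
    favorites: set[str] = field(default_factory=set)
    last_used: dict[str, float] = field(default_factory=dict)  # name -> epoch seconds

    def search(self, query: str) -> list[Category]:
        """Substring match on name or description; favorites first, then most recently used."""
        q = query.lower()
        hits = [c for c in self.categories
                if q in c.name.lower() or q in c.description.lower()]
        return sorted(hits, key=lambda c: (c.name not in self.favorites,
                                           -self.last_used.get(c.name, 0.0)))

    def select(self, name: str, now: float) -> Category:
        """The user picks the context explicitly; nothing is inferred."""
        self.last_used[name] = now
        return next(c for c in self.categories if c.name == name)


def assist(question: str, category: Category,
           ask_model: Callable[[str], str]) -> str:
    """AI help scoped to the chosen category; ask_model is a placeholder for any LLM call."""
    prompt = f"Context: {category.name} ({category.description})\nQuestion: {question}"
    return ask_model(prompt)


# Usage with made-up categories and a stub model
catalog = UserCatalog(
    categories=[Category("Billing", "Invoices and payments"),
                Category("Billing Disputes", "Chargebacks and refunds"),
                Category("Onboarding", "New account setup")],
    favorites={"Billing Disputes"},
)
catalog.select("Billing", now=1000.0)
print([c.name for c in catalog.search("billing")])  # ['Billing Disputes', 'Billing']

reply = assist("Why was I charged twice?",
               catalog.select("Billing", now=2000.0),
               ask_model=lambda prompt: f"(stub reply to) {prompt}")
```

Note what is missing: there is no step where the model guesses among thousands of categories. The inference problem that stalled the project simply does not exist in this design.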

The team is back on track.

Something real is shipping.

Something customers actually want.

Total cost of intervention: $125K.

A fraction of what continuing down the wrong path would have burned.

Why You Need an Outside Consultant

Your engineering team isn’t trying to waste time.

They’re smart.

They’re committed.

But they’re inside the kaleidoscope — too close to see the pattern.

An outside consultant brings:

Fresh eyes

Pattern recognition from 16 years and 34+ prior AI engagements

The ability to ask uncomfortable questions

An obsession with shipping what customers actually want to buy, not PowerPoints

Internal teams can’t do this for themselves.

They’re too invested in the current approach.

Ask Yourself:

Has your AI project been “in progress” for 6+ months with nothing shipped?

Do status updates sound sophisticated — but never answer “when do customers get this?”

Are engineers solving interesting technical problems instead of user problems?

If yes, you don’t have a technical problem.

You have a clarity problem.

Before the board starts asking the hard questions, get ahead of the curve.

The first step is a straightforward conversation about where your project stands, what's blocking progress, and what it will take to ship something real. No fluff. No junior handoffs.

You’ll walk away with 2–3 concrete next steps — whether or not we work together.

The alternative is letting the kaleidoscope spin for another quarter, then explaining why nothing is live.

Your choice.
