AI Accuracy is Bullsh*t. Here's what UX must do about it. (Part 1)

IMAGE CREDIT: Midjourney /imagine value matrix split into 4 elements.

What's the best-kept secret in the Data Science community? AI Accuracy is meaningless in the real world. This article gives UX Designers a practical alternative to Accuracy: a UX- and business-centric way to optimize your AI solution to “think” in terms of “human” values.

Are you ready?

The Big Secret

For many years, the data science world has relied on metrics like Accuracy, Precision, and Recall. Data Science competitions like Kaggle (https://www.kaggle.com/competitions) rank entries on a single evaluation metric, such as Accuracy. The big secret is that while Data Science metrics are informative, they actually mean little in real-world applications of AI.

Let’s take a simple use case: car maintenance. Imagine a fictional car manufacturer, “Pascal Motors,” whose cars have an onboard AI that sends out a special alert whenever a car needs to come in for maintenance. Let’s say that in a year of operation, a typical car has 100 potential problems, 20 of which are actual problems. Let’s also assume that the benefit of successfully identifying and preventing a problem is $1,000 (for example, replacing a part before it fails, instead of paying for an accident or for the car breaking down in the middle of a journey), and the cost of investigating a potential problem is $100 (the cost of one mechanic checking out the problem for one hour).

Pascal Motors engineers have access to three different AI models: Conservative, Balanced, and Aggressive. These models feature the following Data Science metrics:

TABLE: AI Model Selection Based on Data Science Metrics: Accuracy, Precision, and Recall

Which AI model do you think is the best one?

Most people would pick the Conservative AI because who does not want an AI that is both Accurate and Precise?

How about now?

TABLE: AI Model Selection Based on Real-World Outcomes, Assuming TP of $1000 and TN of $100

If you pick the best AI based solely on the best Data Science metrics, your choice would be wrong.

When we instead optimize for revenue, taking into account the actual costs and benefits of each outcome, the right answer is the Balanced AI (column 2), which produces over 158% more revenue than the Conservative (Accurate) AI.

That’s because:

AIs optimized on the data science metrics alone underperform AIs that take into account the costs and benefits of real-world outcomes.

That’s it. That’s the big secret.

How can Accurate AI be wrong?

At this point, you might be confused: just how exactly can accurate AI be wrong? Isn’t accurate AI the goal? To answer this question, we need to dig into the simple formula for calculating Accuracy. I promise it will be quick, and we’ll keep the discussion as simple as we can, so even if you don’t have many pleasant memories of your math classes, please read on: it will be worth it.

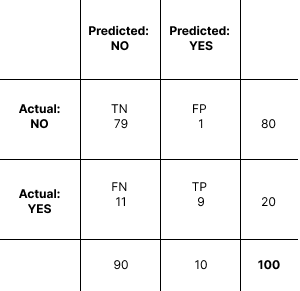

Now to understand what is meant by “Accuracy,” we have to look at a simple table known as a Confusion Matrix. Far from being confusing, Confusion Matrix is actually pretty straightforward: it is simply a table where we collect the count predicted by the model and compare it against the actual outcomes.

Image Source: Fortiche Studios via Midjourney Imagine/ a robot checking a car and thinking hard in the style of the arcane.

Each time the Pascal Motors AI evaluates one of a car’s 100 potential problems, it decides whether or not to send out an alert. When the AI decides to send out an alert, that’s a Positive. If the AI decides to ignore a sensor reading, it’s a Negative. Since we assume there are 100 potential events in one year, our AI has a total of 100 decision points at which it decides whether to send out an alert.

Now, our AI does not actually “know” for sure that there is a problem with a car. It has to rely on the readings of various sensors that pick up, say, engine oil impurities, vibration, weird sounds, etc. So sometimes it might guess wrong and send out an alert when it should not have – that is called a “False Positive.” A False Positive might occur, for example, if a car just sounded weird on a cold morning and our AI decided there was a problem, but the mechanics found none.

Conversely, the AI might miss a real problem and decide that the car is operating properly when it is actually about to have a serious breakdown. In this case, the AI erroneously does NOT send out an alert, creating a “False Negative.”

Thus, for each of the 100 decision points (or checks) in a given year, the AI’s decision has one of four possible outcomes:

True Negative (TN): there is no problem with the car, and the AI does not send an alert.

False Negative (FN): there is actually a problem, but the AI does not tell us.

True Positive (TP): there is a problem with the car, and the AI correctly sends an alert.

False Positive (FP): the car is operating normally, but the AI sends out an alert.

A Confusion Matrix is simply a count of how many of each outcome a particular model generates. It is a useful tool because it lets us compare how different AI models perform by the counts of outcomes they generate.
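To make the counting concrete, here is a minimal Python sketch that labels each decision point as TP, FP, FN, or TN and tallies the results. The function names are hypothetical, and the 100 decision records are made up for illustration, arranged so that they reproduce the Conservative model's counts (9 TP, 1 FP, 11 FN, 79 TN).

```python
from collections import Counter

def outcome(actual_problem: bool, alert_sent: bool) -> str:
    """Label a single decision point with one of the four outcomes."""
    if alert_sent:
        return "TP" if actual_problem else "FP"
    return "FN" if actual_problem else "TN"

def confusion_counts(actuals: list[bool], alerts: list[bool]) -> dict[str, int]:
    """Tally the four outcomes across paired (actual, predicted) decision points."""
    if len(actuals) != len(alerts):
        raise ValueError("each decision point needs both an actual and a predicted value")
    tally = Counter(outcome(a, p) for a, p in zip(actuals, alerts))
    # Report every outcome, including any that never occurred (Counter returns 0).
    return {label: tally[label] for label in ("TP", "FP", "FN", "TN")}

# Hypothetical year of 100 decision points: 20 real problems, then 80 non-problems.
actuals = [True] * 20 + [False] * 80
# Hypothetical alerts: 9 of the 20 problems flagged, then 1 false alarm among the 80.
alerts = [True] * 9 + [False] * 11 + [True] * 1 + [False] * 79

print(confusion_counts(actuals, alerts))  # {'TP': 9, 'FP': 1, 'FN': 11, 'TN': 79}
```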

Simple, no?

Thus, for the Conservative (highly accurate) AI model we looked at above, the Confusion Matrix is as follows:

TABLE: Confusion Matrix for the Conservative AI Model

To read the Confusion Matrix, start by going around the outside of the table. As discussed above, we had a total of 100 measurements and 20 actual YESs, which means 80 actual NOs. The Conservative AI sent out 10 alerts and predicted “no problem” 90 times. Of the 10 alerts the Conservative AI sent out, 9 correctly identified a problem (True Positive) and 1 was incorrect (False Positive). Of its 90 “no problem” predictions, 79 were correct (True Negative) and 11 missed a real problem (False Negative).
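Going around the outside of the table is enough to fill in every cell: given the totals and the number of correct alerts, the remaining cells follow by subtraction. A minimal sketch (the helper name `cells_from_margins` is hypothetical):

```python
def cells_from_margins(total: int, actual_problems: int, alerts: int, correct_alerts: int) -> dict[str, int]:
    """Derive all four Confusion Matrix cells from the table's margins."""
    tp = correct_alerts
    fp = alerts - correct_alerts            # alerts where there was no problem
    fn = actual_problems - correct_alerts   # real problems that got no alert
    tn = total - tp - fp - fn               # no problem and no alert
    if min(tp, fp, fn, tn) < 0:
        raise ValueError("the margins are inconsistent")
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}

# Conservative AI: 100 measurements, 20 actual problems, 10 alerts, 9 of them correct.
print(cells_from_margins(100, 20, 10, 9))  # {'TP': 9, 'FP': 1, 'FN': 11, 'TN': 79}
```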

Computing accuracy from this table is straightforward: we take the number of correct predictions, divide it by the total number of predictions, and express the result as a percentage:

Accuracy = Correct Predictions/Total Predictions * 100%

To use a simple example, if you tossed a coin 100 times and predicted “heads” on every toss, you would be correct about 50 times, so you’d be about 50% accurate.

In the case of Pascal Motors, there were 20 actual problems among 100 total measurements. Our Conservative AI made a total of 88 correct predictions (79 True Negatives + 9 True Positives) out of a grand total of 100 predictions, so its accuracy is:

Accuracy = (79 + 9)/100 * 100% = 88%
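The same formula, written in terms of the four outcome counts, as a short Python sketch. The second call encodes the coin-toss example under a hypothetical exact split of 50 heads in 100 tosses, treating a predicted “heads” as a Positive:

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Accuracy = correct predictions (TP + TN) / total predictions."""
    return (tp + tn) / (tp + tn + fp + fn)

# Conservative AI: 9 True Positives, 79 True Negatives, 1 False Positive, 11 False Negatives.
print(f"{accuracy(tp=9, tn=79, fp=1, fn=11):.0%}")   # 88%

# Always predicting "heads" on 100 tosses that land heads exactly 50 times:
# 50 correct "heads" calls (TP) and 50 wrong ones (FP).
print(f"{accuracy(tp=50, tn=0, fp=50, fn=0):.0%}")   # 50%
```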

Now, 88% is great accuracy! Unfortunately for us, however, the model missed 11 of the 20 actual problems. In fact, the Conservative AI model is nearly useless for us: it found fewer than half of the problems (9 out of 20, a Recall of just 45%)!

How does an Accurate AI become so useless? By now, the answer should not surprise you:

AI trained on Accuracy is often too timid: it tries too hard not to be wrong, and so it “leaves money on the table” by not taking enough chances to send out an alert.

Conversely, on the other extreme,

AI that is trained on Recall tries to account for every possible positive – which is often too aggressive for real-world use.

For instance, in an effort to locate 19 out of 20 problems, our Aggressive AI model sent out 80 alerts!
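Precision and Recall make this trade-off visible where Accuracy hides it. The sketch below uses the standard definitions (Precision = TP / (TP + FP), Recall = TP / (TP + FN)). The Aggressive model's cells are derived from the figures just quoted: 80 alerts with 19 of the 20 problems found leaves 61 false alarms, 1 missed problem, and 19 True Negatives.

```python
def metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Standard classification metrics from Confusion Matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": tp / (tp + fp),  # share of alerts that were real problems
        "recall": tp / (tp + fn),     # share of real problems that got an alert
    }

models = {
    "Conservative": dict(tp=9, fp=1, fn=11, tn=79),
    # 80 alerts, 19 correct -> 61 false alarms; 1 missed problem; 100 - 19 - 61 - 1 = 19 TN.
    "Aggressive": dict(tp=19, fp=61, fn=1, tn=19),
}

for name, counts in models.items():
    print(name, {k: round(v, 4) for k, v in metrics(**counts).items()})
# Conservative {'accuracy': 0.88, 'precision': 0.9, 'recall': 0.45}
# Aggressive {'accuracy': 0.38, 'precision': 0.2375, 'recall': 0.95}
```

Neither extreme wins on all three metrics, which is exactly why a single Data Science metric cannot settle the choice on its own.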

The best AI choice for this application is actually the Balanced AI: although it does not excel at any particular Data Science metric, it produces the highest ROI, $15,000, which is more than 158% higher than that of the Accurate model.
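To check the $15,000 and 158% figures, the sketch below scores each model by multiplying its outcome counts by the scenario's per-outcome dollar values (+$1,000 per TP, -$1,000 per FN, +$100 per TN, -$100 per FP) and summing, which is the Value Matrix idea introduced in the next section. The Conservative and Aggressive counts come from the text. The Balanced counts (15 TP, 15 FP, 5 FN, 65 TN) are not stated in this article; they are an inference, namely the counts that reproduce both of the Balanced model's quoted ROI figures ($15,000 here and $105,000 in the $10,000-per-TP scenario).

```python
VALUES = {"TP": 1_000, "FN": -1_000, "TN": 100, "FP": -100}

MODELS = {
    "Conservative": {"TP": 9, "FP": 1, "FN": 11, "TN": 79},
    # Inferred, not stated in the text: reproduces the quoted Balanced ROI figures.
    "Balanced": {"TP": 15, "FP": 15, "FN": 5, "TN": 65},
    "Aggressive": {"TP": 19, "FP": 61, "FN": 1, "TN": 19},
}

def roi(counts: dict[str, int], values: dict[str, int]) -> int:
    """Sum of (count x dollar value) over the four outcomes."""
    return sum(counts[cell] * values[cell] for cell in values)

rois = {name: roi(counts, VALUES) for name, counts in MODELS.items()}
best = max(rois, key=rois.get)
uplift = rois["Balanced"] / rois["Conservative"] - 1

print(rois)                   # {'Conservative': 5800, 'Balanced': 15000, 'Aggressive': 13800}
print(best, f"{uplift:.1%}")  # Balanced 158.6%
```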

And in the real world, the ROI is the only metric that actually matters.

OK, hopefully you are now convinced that Data Science metrics like Accuracy and Recall alone are not going to give you the best AI for real-world applications. To develop the right AI, you need a different tool: the Value Matrix.

Value Matrix: The AI Tool for the Real World

The Value Matrix was developed by Arijit Sengupta specifically for real-world applications of AI. It is a simple tweak to the traditional Confusion Matrix: as the name implies, the UX Designer or Product Manager adds the dollar value of each outcome to the traditional Confusion Matrix. Multiplying each value by the count of that outcome in the Confusion Matrix, and then adding up the four results, gives us a clear reading of the AI model’s overall ROI.

For instance, using the Conservative AI model’s Confusion Matrix above and assuming that the benefit of identifying and preventing a problem successfully is $1,000 and the cost of investigating a potential problem is $100, the corresponding Value Matrix would look like this:

TABLE: Value Matrix for Conservative AI Model assuming TP of $1000 and TN of $100.

Correct guesses have positive values (benefits), and wrong guesses have negative values (costs). For example, under our current set of assumptions, sending a customer into a repair shop when there is no problem (False Positive) costs the company $100 (i.e., -$100). Conversely, correctly identifying that there is no issue (True Negative) saves $100 (i.e., +$100). Correctly identifying a problem (True Positive) saves $1,000 (i.e., +$1,000), whereas missing the problem (False Negative) costs $1,000 (i.e., -$1,000).

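NOTE: In this example, each benefit has a matching cost of the same size (TP and FN are +/-$1,000; TN and FP are +/-$100). In a different use case, the benefits of correct predictions (TP, TN) and the costs of wrong ones (FP, FN) would not necessarily have the same absolute values.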
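The cell-by-cell arithmetic of the Value Matrix fits in a few lines of Python. The dollar values below are the ones stated above; the dictionary layout and the `value_matrix` name are illustrative. Because each outcome carries its own value, the same function works unchanged when benefits and costs are not mirror images. Summing the four cells gives the Conservative model's overall ROI of $5,800 under these assumptions (the text does not state this total, but it follows from its numbers).

```python
# Dollar value of a single occurrence of each outcome, per the assumptions above.
VALUES = {"TP": 1_000, "FP": -100, "FN": -1_000, "TN": 100}

# Conservative AI Confusion Matrix counts.
CONSERVATIVE = {"TP": 9, "FP": 1, "FN": 11, "TN": 79}

def value_matrix(counts: dict[str, int], values: dict[str, int]) -> dict[str, int]:
    """Multiply each outcome count by that outcome's dollar value."""
    return {cell: counts[cell] * values[cell] for cell in counts}

cells = value_matrix(CONSERVATIVE, VALUES)
print(cells)                # {'TP': 9000, 'FP': -100, 'FN': -11000, 'TN': 7900}
print(sum(cells.values()))  # 5800
```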

Essentially, the Value Matrix is a tool that helps your team recognize that each predictive outcome has a monetary effect. The Value Matrix is exceptionally powerful because it allows us to evaluate the real-world outcomes of deploying different AI models.

Training AI on Real-Life Outcomes to “Think” like a Human

By now, it should be obvious that a different value assumption would produce a very different Value Matrix. For instance, if the cost of a False Positive in our use case were higher, say, $800 every time a customer came into the shop, you’d be pretty happy with the Conservative AI model, which has the highest accuracy and seeks not to be wrong:

TABLE: AI Model Selection Based on Real-World Outcomes, Assuming TP of $1,000 and TN of $800

In contrast, if the value of a True Positive were greater, say, we would save $10,000 each time the AI predicted a problem, we would want to alert on every potential issue, so the Aggressive AI trained on Recall would be better, because such a model seeks to capture every possible True Positive:

TABLE: AI Model Selection Based on Real-World Outcomes, Assuming TP of $10,000 and TN of $100

Note that when the values of the outcomes change, AI models trained for the opposite goal can actually produce a negative ROI!

For example, if the value of a True Positive were $10,000 and that of a True Negative were $100:

Deploying our Conservative, highly accurate AI model would LOSE $12,200, whereas deploying the Balanced or Aggressive model would generate $105,000 or $175,800 in REVENUE, respectively.
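Re-running the same ROI calculation under each of the three value assumptions shows how the winning model flips. This sketch assumes the Value Matrix is symmetric in every scenario (a False Negative costs as much as a True Positive saves, and a False Positive costs as much as a True Negative saves), which matches the base case; the table captions list only the TP and TN values. The Balanced counts are inferred, not stated in the article: they are the counts that reproduce its quoted Balanced ROI figures. The output reproduces the -$12,200, $105,000, and $175,800 figures above.

```python
MODELS = {
    "Conservative": {"TP": 9, "FP": 1, "FN": 11, "TN": 79},
    "Balanced": {"TP": 15, "FP": 15, "FN": 5, "TN": 65},  # inferred, see lead-in
    "Aggressive": {"TP": 19, "FP": 61, "FN": 1, "TN": 19},
}

def symmetric_values(tp_value: int, tn_value: int) -> dict[str, int]:
    """Assumption: a miss costs what a hit saves; a false alarm costs what a correct 'no alert' saves."""
    return {"TP": tp_value, "FN": -tp_value, "TN": tn_value, "FP": -tn_value}

def roi_by_model(values: dict[str, int]) -> dict[str, int]:
    """Value Matrix total (count x value, summed) for every model."""
    return {name: sum(counts[c] * values[c] for c in values) for name, counts in MODELS.items()}

SCENARIOS = {
    "TP $1,000 / TN $100": symmetric_values(1_000, 100),
    "TP $1,000 / TN $800": symmetric_values(1_000, 800),
    "TP $10,000 / TN $100": symmetric_values(10_000, 100),
}

for label, values in SCENARIOS.items():
    rois = roi_by_model(values)
    print(f"{label}: {rois} -> best: {max(rois, key=rois.get)}")
# TP $1,000 / TN $100: {'Conservative': 5800, 'Balanced': 15000, 'Aggressive': 13800} -> best: Balanced
# TP $1,000 / TN $800: {'Conservative': 60400, 'Balanced': 50000, 'Aggressive': -15600} -> best: Conservative
# TP $10,000 / TN $100: {'Conservative': -12200, 'Balanced': 105000, 'Aggressive': 175800} -> best: Aggressive
```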

Do you still think purely Data Science metrics like Accuracy have any relevance in the real world?

One More Example

Above, we demonstrated that you can use the Value Matrix to focus AI on real-world outcomes.

Instead of asking, “Which event is most likely to be a problem?”, AI should be asking a business question: “How do I maximize Revenue?” Understanding the AI’s real-world impact in terms of ROI instead of data science metrics gives us a handle on training AI to “think” like a human.
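One standard way to make a model “ask” the revenue question is cost-sensitive decision-making: if the model outputs a probability p that a reading is a real problem, it alerts only when the expected value of alerting beats the expected value of staying silent. This is a textbook decision-theory technique swapped in for illustration, not the method described in this article; the function names are hypothetical, and the two alternative scenarios assume that each False Positive costs as much as a True Negative saves and each False Negative costs as much as a True Positive saves.

```python
def alert_threshold(values: dict[str, float]) -> float:
    """Probability above which alerting has the higher expected value.

    Alerting is worth more when
        p*TP + (1 - p)*FP > p*FN + (1 - p)*TN,
    which rearranges to
        p > (TN - FP) / ((TN - FP) + (TP - FN)).
    """
    false_alarm_regret = values["TN"] - values["FP"]  # what a needless alert gives up
    miss_regret = values["TP"] - values["FN"]         # what a missed problem gives up
    return false_alarm_regret / (false_alarm_regret + miss_regret)

def should_alert(p_problem: float, values: dict[str, float]) -> bool:
    return p_problem > alert_threshold(values)

base = {"TP": 1_000, "FP": -100, "FN": -1_000, "TN": 100}
costly_visits = {"TP": 1_000, "FP": -800, "FN": -1_000, "TN": 800}
costly_misses = {"TP": 10_000, "FP": -100, "FN": -10_000, "TN": 100}

for name, values in [("base", base), ("costly visits", costly_visits), ("costly misses", costly_misses)]:
    print(f"{name}: alert when p > {alert_threshold(values):.3f}")
# base: alert when p > 0.091
# costly visits: alert when p > 0.444
# costly misses: alert when p > 0.010
```

A low threshold behaves like the Aggressive model and a high one like the Conservative model, so the dollar values, not Accuracy, decide how bold the AI should be.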

As UX and Product leaders, you can probably see a great number of applications of this important principle, and why it is so important to understand the use case outcomes in great detail. This is one of the key takeaways:

UX research and analysis are essential for helping AI think in more “human” terms. And in this column, we will continue to give you tools to do just that.

We are going to leave you with one of the most spectacular examples of the principle of attaching real-world value to the outcomes. This example is from Arijit Sengupta:

Assume the TSA had an AI that predicted whether someone was a terrorist. If this AI returned FALSE 100% of the time, it would be highly accurate, at well over 99.99% accuracy, because the vast majority of people traveling through a TSA checkpoint are not terrorists.

Such a model would be super-accurate!

It would also be (obviously) super-useless.
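This accuracy paradox is easy to reproduce. The sketch below uses made-up round numbers (one real threat among 1,000,000 travelers, chosen purely for illustration) and a model that always answers FALSE:

```python
def always_false_metrics(travelers: int, threats: int) -> tuple[float, float]:
    """Accuracy and Recall of a model that never flags anyone."""
    tp, fp = 0, 0                  # it never answers TRUE, so there are no positives
    fn = threats                   # every real threat is missed
    tn = travelers - threats       # everyone else is correctly cleared
    accuracy = (tp + tn) / travelers
    recall = tp / (tp + fn)
    return accuracy, recall

acc, rec = always_false_metrics(travelers=1_000_000, threats=1)  # hypothetical numbers
print(f"accuracy = {acc:.4%}, recall = {rec:.0%}")  # accuracy = 99.9999%, recall = 0%
```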

On the other hand, if this TSA AI also considered the impact of a terrorist attack (about $1 trillion) vs. the cost of pulling a suspicious person aside for a secondary inspection (maybe 2 minutes of a TSA agent’s time, so $1 if they are paid $30/hour), you can see that a very different TSA AI model would emerge. Instead of optimizing for Accuracy, the TSA might want to optimize for Recall, i.e., make the model more aggressive. Much more aggressive… In fact, the TSA could pull aside 999,999,999,999 people (the entire human population of Earth, 8 billion people… 125 times over!) and still come out $1 ahead.
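The back-of-the-envelope arithmetic behind these numbers, using the text's own assumptions ($30/hour, 2 minutes per secondary inspection, about $1 trillion per attack, 8 billion people):

```python
ATTACK_COST = 1_000_000_000_000   # about $1 trillion per attack (assumption from the text)
WAGE_PER_HOUR = 30                # TSA agent pay in dollars (assumption from the text)
MINUTES_PER_INSPECTION = 2
WORLD_POPULATION = 8_000_000_000

cost_per_inspection = WAGE_PER_HOUR * MINUTES_PER_INSPECTION / 60  # dollars
# Largest number of inspections whose total cost stays $1 below one attack.
max_inspections = int(ATTACK_COST / cost_per_inspection) - 1

print(cost_per_inspection)                                          # 1.0
print(f"{max_inspections:,}")                                       # 999,999,999,999
print(round(ATTACK_COST / cost_per_inspection / WORLD_POPULATION))  # 125
```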

Which raises a deeper question:

Why doesn't the TSA do a secondary inspection for every traveler?

The Importance of Human Cost/Benefit

Naturally, I can already hear the chorus of UX designers shouting, "But what about the HUMANS? What about our CUSTOMERS’ ROI?"

You all would be correct, of course. In addition to the business cost/benefit, you should think long and hard about the human/customer cost/benefit.

That is why UX is so essential to creating AI solutions.

Simply put,

AI is just too important to leave to Data Scientists. Pure Data Science metrics like Accuracy, Precision, and Recall alone don’t create viable real-world solutions. Every real-world AI solution should be tempered by a deeper understanding of both the business and human impact it creates. AI is indisputably our collective future – nothing can change that. As a UX Designer, your job is to understand how to use this incredible tool for the benefit of humankind and to ensure that the people in charge do the right thing for humanity and the planet.

Which brings us to a closing exercise – take a moment to really ponder the question we raised in the previous section:

Why doesn't the TSA do a secondary inspection for every traveler?

Be sure to consider both business ROI and human costs. As the UX Designer doing the analysis to train the AI for the real world, do your best to quantify each of the four possible outcomes using the Value Matrix method. Let us know what you came up with in the comments below.

I hope you found this latest installment of our column “UX for AI: Plug me back into the (Value) Matrix” as transformative to your thinking as we did… Ahem, actually, our column is titled “UX for AI: UX Leadership in the Age of Singularity,” and you should hit the [subscribe] button below because you don’t want to miss essential techniques like this one.

See you in the Real World,

References:

Gartner Data and Analytics Summit Showcase, March 21, 2019. https://youtu.be/XA2FhDo3hm4

Arijit Sengupta et al., “Take the AI Challenge.” https://aible.com (accessed May 8, 2019).

Evidently AI, “Accuracy vs. precision vs. recall in machine learning: what's the difference?” https://www.evidentlyai.com/classification-metrics/accuracy-precision-recall#:~:text=Accuracy%20paradox,-There%20is%20a%20downside%20to
