UX for AI

The Easiest Way to Eat Your AI Data Salami (and Enjoy It)

... Is to slice it thin. Here's how.

A company decides to build an AI-powered system. They identify 20 use cases. They get excited. They try to build for all 20 at once — one big prompt, one big RAG, one big vector database, all of it mashed together.

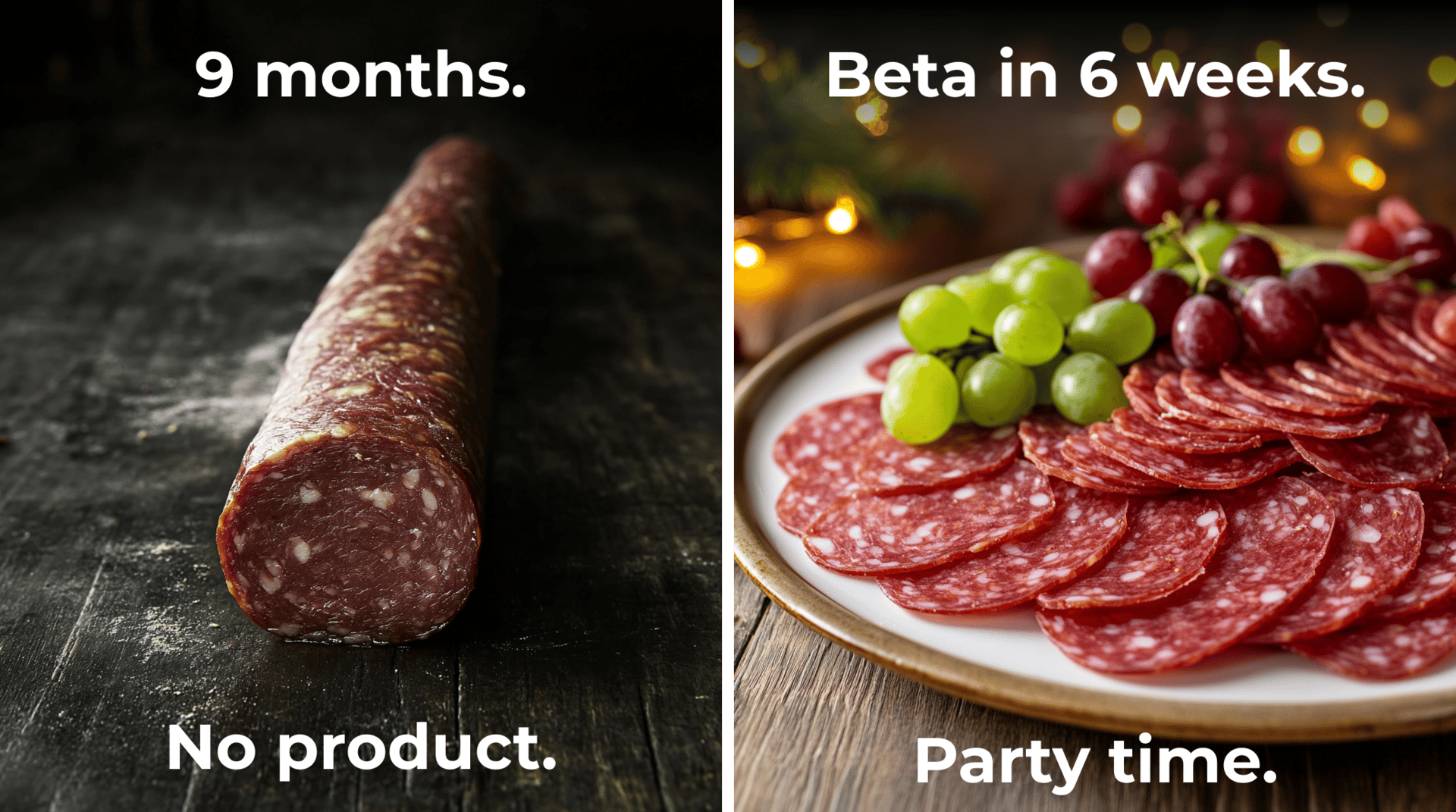

Nine months later: no working product. Three dev teams burned through. Architecture debates that never end. And a board asking uncomfortable questions about where the money went.

They tried to eat the whole salami in one sitting. No knife. Just a room full of people staring at an intimidating stick of cured meat, not sure where to start.

Here's the thing about salami: it's not meant to be eaten whole. It's meant to be sliced. Thin. One piece at a time. You taste it. You decide if you like it. You have another slice. Then another. Before you know it, the whole stick is gone — and you actually enjoyed it.

Even better: invite your friends to the party. Everyone eats a few slices. You serve some Châteauneuf-du-Pape. Now you might need a second salami just to keep up. That's a good problem to have.

This is exactly how I build AI products. And I'm going to show you the exact pattern — using a real system I built and launched recently at a mid-sized cybersecurity company.

Round 1: One Thin Slice

We were building an AI-powered rule generation system for security detection. The SIEM had over 1,000 detection rules across dozens of data sources — CloudTrail, GuardDuty, Route 53, Azure, GCP, and on and on. Writing and maintaining these rules by hand was slow, error-prone, and couldn't keep up with the evolving threat landscape.

Most teams would try to tackle all 1,000 rules at once. Boil the ocean. Eat the whole salami.

Instead, I picked one data source: AWS CloudTrail. Just CloudTrail. About 15 detection rules. Tiny. Small enough to fit in Claude's context window without any changes, and that's the whole point.

I built 15 RAG files that taught the LLM how CloudTrail rules were structured — the detection logic, query syntax, variables, errors, and output format. Small enough to manually inspect every single generated rule. Small enough to test every output by hand. Small enough to optimize the living hell out of it in an afternoon.

Here's the whole system:

[Diagram: Round 1: Thin Slice]

That's it. One data source. 15 RAGs. 15 rules.
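To make the Round 1 shape concrete, here is a minimal Python sketch of an "LLM + RAGs" generator for a single data source. It assumes the RAG files are Markdown files in one directory and that your model client is wrapped as a plain function taking a system prompt and a user prompt. `load_rag_files`, `build_system_prompt`, and `generate_rule` are hypothetical names, not the production code. Because the whole set fits in the context window, the sketch simply inlines every file instead of retrieving from a vector database.

```python
from pathlib import Path
from typing import Callable, Dict

# Assumption: your LLM client is wrapped as (system_prompt, user_prompt) -> response text.
LLMCall = Callable[[str, str], str]


def load_rag_files(rag_dir: Path) -> Dict[str, str]:
    """Read every RAG file for ONE data source (hypothetical layout: one .md file per topic)."""
    return {p.stem: p.read_text(encoding="utf-8") for p in sorted(rag_dir.glob("*.md"))}


def build_system_prompt(data_source: str, rag_files: Dict[str, str]) -> str:
    """Inline all RAG files into the prompt.

    ~15 small files fit in the context window, so this sketch needs no
    vector database or retrieval step: the model sees everything.
    """
    sections = [f"### {name}\n{text.strip()}" for name, text in sorted(rag_files.items())]
    return (
        f"You write {data_source} detection rules and nothing else.\n"
        f"If a request is not about {data_source}, reply UNSUPPORTED.\n\n"
        + "\n\n".join(sections)
    )


def generate_rule(request: str, data_source: str, rag_files: Dict[str, str], llm: LLMCall) -> str:
    """One call: narrow system prompt plus the user's rule request."""
    return llm(build_system_prompt(data_source, rag_files), request)
```

Keeping the generator this small is deliberate: every output can still be inspected by hand.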

We validated it internally, then put the "LLM + RAGs" generator in front of customers and told them: this only knows CloudTrail. Nothing else. It won't generate rules for anything else.

No UI. Just LLM + RAGs.

Their response: "Oh my god, these rules are good. This will save me so much time. When can I have this? How much does it cost?"

That's how you know the salami tastes good. One thin slice. And they wanted more.

(I talked about this process in more detail on The Biz & Tech Show with Ahuva Fischer this week — the thin slicing, the thirsty fish problem, a FORTRAN robot from my Gilead Sciences days. Worth a listen if this resonates: https://www.youtube.com/watch?v=f3VyCybxypg)

Round 2: The Router

Now here's where most teams make a critical mistake.

They say, "Great, CloudTrail works! Let's add more!" and they dump everything into the same RAG system. GuardDuty rule structures, Route 53 formats, Azure alert schemas, GCP conventions — all mashed together in one RAG collection.

You have just set up your simple POC for failure.

You're trying to optimize disparate things that were never meant to work together. Now you're struggling with weird hallucinations. Latency. Endless vector DB infrastructure debates. Context-window management. Nine months of whack-a-mole. You took your beautifully sliced salami and shoved all the pieces back into the casing.

Instead, I built a second thin slice. A RAG tuned specifically for GuardDuty rule generation. Optimized independently, on its own terms.

Now I had two slices. Both generated high-quality rules on their own. The question became: how does the system know which RAG to use?

I added a router.

[Diagram: Round 2: Add Router]

The router's job is dead simple. A CloudTrail rule request is a CloudTrail rule request. A GuardDuty rule request is a GuardDuty rule request. The data source tells you which RAG to use. The router reads the label and sends it to the specialist that's already been tuned for exactly that format.

No ambiguity. No judgment calls. Clean boundaries.
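A sketch of that dispatch, assuming each request arrives with an explicit data source label and each specialist is already a callable "LLM + RAGs" generator. `RuleRequest`, `Router`, and the label values are illustrative, not taken from the system described here.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class RuleRequest:
    data_source: str  # hypothetical label values, e.g. "cloudtrail", "guardduty"
    description: str


# Each specialist is an independently tuned "LLM + RAGs" generator for one data source.
Specialist = Callable[[str], str]


class Router:
    def __init__(self) -> None:
        self._specialists: Dict[str, Specialist] = {}

    def register(self, data_source: str, specialist: Specialist) -> None:
        """Add a new thin slice without touching the existing ones."""
        key = data_source.strip().lower()
        if key in self._specialists:
            raise ValueError(f"duplicate specialist for {data_source!r}")
        self._specialists[key] = specialist

    def route(self, request: RuleRequest) -> str:
        """Read the label, hand off to the matching specialist. No judgment calls."""
        key = request.data_source.strip().lower()
        specialist = self._specialists.get(key)
        if specialist is None:
            raise LookupError(f"no specialist RAG registered for {request.data_source!r}")
        return specialist(request.description)
```

Registering a new slice is one `register` call, and nothing about the existing specialists changes, which is what keeps their tuning intact.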

Why the Router is the Whole Game

Without a router, you have one monolithic system trying to generate rules for every data source at once. That's the whole salami with no knife.

With a router, each RAG is a specialist. It only knows one data source's rule structure, and it knows it deeply. Hallucination drops dramatically because you're not asking the LLM to hold 20 different rule formats in its head at once. You're asking it to generate one type of rule, using a RAG specifically tuned for that one format.

And this scales almost infinitely.

[Diagram: Round N: Scaled System]

Give 10 teams 10 different data sources each. Now you have 100 specialized RAGs, all routing through the same classifier. The router doesn't care if there are 2 RAGs behind it or 200. As long as it can classify the data source, it routes each request to the right specialist RAG every time. And classification is usually trivial, because the request typically tells you its data source. When it doesn't, the system can simply ask the user a clarifying question.

You scale horizontally: by adding thin slices, not by making the salami bigger.
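Here is one way to sketch the classify-or-ask step. It swaps in simple keyword matching as a stand-in for the LLM classifier described in the FAQ below, and the alias table is hypothetical. When exactly one data source matches, the request is routed; when none or several match, the router returns a clarifying question instead of guessing.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical alias table; keyword matching stands in for an LLM classifier.
ALIASES: Dict[str, List[str]] = {
    "cloudtrail": ["cloudtrail", "cloud trail"],
    "guardduty": ["guardduty", "guard duty"],
    "route53": ["route53", "route 53", "dns query"],
}


@dataclass
class Classification:
    data_source: Optional[str]          # set when exactly one bucket matches
    clarifying_question: Optional[str]  # set when zero or several buckets match


def classify(request: str, aliases: Dict[str, List[str]] = ALIASES) -> Classification:
    text = request.lower()
    matches = sorted(
        source
        for source, names in aliases.items()
        if any(re.search(rf"\b{re.escape(name)}\b", text) for name in names)
    )
    if len(matches) == 1:
        return Classification(data_source=matches[0], clarifying_question=None)
    # Ambiguous or unknown: ask the user rather than guess.
    options = ", ".join(matches or sorted(aliases))
    return Classification(
        data_source=None,
        clarifying_question=f"Which data source is this rule for? Options: {options}",
    )
```

Adding a data source means adding one alias entry; the routing logic itself never grows.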

The Registry: How 15 RAGs Became 1,000 Rules

Once CloudTrail was dialed in — really working, security engineers throwing money at us — I did something that changed the trajectory of the whole project.

I extracted the common patterns into a RAG Registry.

Every RAG file I built for CloudTrail had a shared structure: how rules were formatted, how severity was classified, how the detection logic was expressed, and how the output was structured. I pulled those common pieces out into a reusable template — a registry that captured what "good" looked like.
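A minimal sketch of a registry entry, assuming each RAG is a set of named sections. The section names mirror the shared pieces listed above (rule format, severity classification, detection logic, output structure) but are otherwise illustrative; `RagTemplate` and `REGISTRY` are hypothetical names. A check like `missing_sections` gives a quick structural review of any newly generated RAG before hand tuning.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RagTemplate:
    """What "good" looks like, extracted from a tuned reference RAG.

    Hypothetical section names; adapt them to your own RAG files.
    """
    required_sections: Tuple[str, ...] = (
        "rule_format",
        "severity_classification",
        "detection_logic",
        "output_structure",
    )

    def missing_sections(self, candidate: Dict[str, str]) -> List[str]:
        """List required sections a new RAG lacks or leaves empty, in template order."""
        return [s for s in self.required_sections if not candidate.get(s, "").strip()]


# Hypothetical registry keyed by rule family; one template can seed many data sources.
REGISTRY: Dict[str, RagTemplate] = {"siem_detection_rule": RagTemplate()}
```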

Then I pointed the LLM at the registry, added a few sample rules, and prompted: "Here's what a great CloudTrail RAG looks like. Now build me one for GuardDuty."

It did. And it was 80% right on the first pass. A few hours of tuning and it was production-ready.

Then Route 53. Then Azure. Then GCP.
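The bootstrapping prompt from the registry step can be assembled mechanically. This is a hedged sketch: `build_bootstrap_prompt` is a hypothetical helper, its wording only paraphrases the prompt quoted above, and its output is a first draft that still needs hand tuning.

```python
from typing import Dict, Sequence


def build_bootstrap_prompt(
    reference_source: str,
    reference_rag: Dict[str, str],
    sample_rules: Sequence[str],
    target_source: str,
) -> str:
    """Ask the LLM to draft a new specialist RAG modeled on a proven one (hypothetical helper)."""
    # Reference RAG sections, in their original order, so the model copies the structure.
    reference = "\n\n".join(f"## {name}\n{text.strip()}" for name, text in reference_rag.items())
    samples = "\n\n".join(
        f"Sample rule {i}:\n{rule.strip()}" for i, rule in enumerate(sample_rules, start=1)
    )
    return (
        f"Here's what a great {reference_source} RAG looks like:\n\n{reference}\n\n"
        f"A few sample rules:\n\n{samples}\n\n"
        f"Now build me one for {target_source}. "
        "Keep the same section headings, in the same order."
    )
```

Asking for the same headings in the same order makes the draft easy to check against the registry template.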

15 RAGs became 1,000 rules in 2 weeks. And a beta AI product in just 6 weeks.

Not because I worked 20-hour days. Because the registry taught the LLM what quality looked like, and each new RAG was just another slice off the same salami. Same pattern. Same structure. Different data.

The snowball was rolling.

Same Knife, Different Salami

This isn't just cybersecurity. The same pattern works anywhere the system can cleanly identify an atomic use case.

→ Insurance: I worked with a startup in Germany on exactly this. We started with pet insurance that only covered dogs. Just dogs. One thin slice: breed-specific conditions, coverage rules, claim patterns. We tuned it until it worked beautifully. Then we scaled to cats, birds, and other pets. Then to other types of insurance entirely. The router classifies by policy type, and a dog policy is always a dog policy. Clean boundaries.

→ Marketing: I built an AI-powered campaign generation system for a large media company. We started with one thin slice: email campaigns. Just email. One RAG tuned to subject lines, body copy, segmentation logic, and CTA patterns. Once email was dialed in, we scaled to social campaigns, then event promotions, then video copy. The router classifies by campaign type — and an email campaign is always an email campaign.

→ Edutech: One of the EU's largest online universities had spent three years building a chatbot powered by one massive combined RAG — course materials, exams, scheduling, everything mashed together. Students rejected it. I advised the leadership to split it. One RAG per course. 700 courses, 700 specialized RAGs. Sub-RAGs for specific units and exams as needed.

But here's the twist — we didn't need a router at all. We built a simple information architecture: a course catalog search system and "courses you're taking" navigation. When a student clicks into Organic Chemistry, the system already knows which RAG to load. The student did the routing with a single click.

You don't always need the router. You just need the router concept. Don't over-engineer something you can solve with good information design.
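In the course-per-RAG setup, the "router" collapses into a lookup keyed by whatever the student clicked. A minimal sketch, assuming a catalog that maps course IDs (and optionally unit or exam IDs) to RAG handles; `CourseRag`, `CATALOG`, `rag_for_page`, and the handle strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CourseRag:
    course_id: str
    rag_index: str             # hypothetical handle to the course's own RAG
    unit_rags: Dict[str, str]  # optional sub-RAGs per unit or exam


# Hypothetical catalog: one entry per course (the university example had 700).
CATALOG: Dict[str, CourseRag] = {
    "organic-chemistry": CourseRag(
        "organic-chemistry", "rag/orgchem", {"exam-1": "rag/orgchem/exam-1"}
    ),
}


def rag_for_page(course_id: str, unit_id: Optional[str] = None) -> str:
    """The student's click already names the course, so routing is a lookup, not a classification."""
    course = CATALOG[course_id]
    if unit_id is not None and unit_id in course.unit_rags:
        return course.unit_rags[unit_id]
    return course.rag_index  # fall back to the course-level RAG
```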

The Pattern

Slice thin. Build the RAG. Add the router. Extract the registry. Invite friends to the party.

And that's how 15 RAGs become 1,000 rules in 2 weeks.

And a beta AI product launch in 6 weeks.

See You at RSA

I’ll be at RSA in March. If you’re attending, email me. Let’s grab a quick coffee. In 15 minutes, I’ll tell you what I see working, and we can strategize how it might apply to your AI product challenges.

You have the salami. I'll bring the knife. And together, we'll have a party to remember.

Greg

FAQ

"If this is a standard technique, why don't more people use it?"

It takes work. Real work. Not the "let me fine-tune a model" kind of work that AI engineers and data scientists love to do, but the "let me sit with a security engineer for three hours and understand exactly how they triage a CloudTrail alert" kind of work. Breaking things into thin slices requires a deeper, more comprehensive understanding of how your customers actually do their work. And it takes translating that understanding into a technical application.

I built my Snowball Sprint Framework as a systematic way to bring together technology, business, and customer. I've been honing it for 16 years across 34 AI-driven products. The pattern is not complicated, but it is sophisticated. It starts with the customer and the business, which are the alpha and the omega of the whole thing. Technology is just an enabler. You need to understand the technology, but framing the use case correctly is the key.

"How does the router actually work?"

Most of the time, the classifier is simply another LLM specifically set up to evaluate the request. It has its own RAG file, but it's much more specialized — only focused on returning the bucket you're targeting. You can try it yourself in about five minutes, but it may take a few hours to really get it working the way you want. Which is exactly why Round 1 has no router at all. And no UI. It's a much simpler system that assumes a single bucket, a single use case. It focuses on getting the AI output right first. Add the router when you need it.
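A sketch of that classifier, assuming an LLM client wrapped as a function of (system prompt, user prompt). The classifier gets its own narrow prompt that lists the allowed buckets, and its answer is validated against that list so an off-list reply falls back to asking the user. `build_classifier_prompt` and `route_with_llm` are hypothetical names.

```python
from typing import Callable, Optional, Sequence

# Assumption: your LLM client is wrapped as (system_prompt, user_prompt) -> response text.
LLMCall = Callable[[str, str], str]


def build_classifier_prompt(buckets: Sequence[str]) -> str:
    """The router's own, much narrower prompt: its only job is to name a bucket."""
    options = "\n".join(f"- {b}" for b in buckets)
    return (
        "Classify the user's request into exactly one of these data sources:\n"
        f"{options}\n"
        "Reply with the bucket name only. If none fits or it is unclear, reply UNKNOWN."
    )


def route_with_llm(request: str, buckets: Sequence[str], llm: LLMCall) -> Optional[str]:
    """Return a valid bucket, or None so the caller can ask the user a clarifying question."""
    answer = llm(build_classifier_prompt(buckets), request).strip().strip(".").lower()
    by_lower = {b.lower(): b for b in buckets}
    # Anything off-list (including UNKNOWN or a hallucinated bucket) becomes None.
    return by_lower.get(answer)
```

Validating the reply against the bucket list is the design choice that matters: the classifier can never route to a specialist that doesn't exist.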

"Is this a proprietary technique?"

Not even close. The idea of routing inputs to specialized experts dates back to Jacobs, Jordan, Nowlan & Hinton's 1991 paper "Adaptive Mixtures of Local Experts" in Neural Computation. Google Brain scaled it in Shazeer et al.'s 2017 "Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer" at ICLR — the foundation of every modern Mixture-of-Experts architecture. RAG itself was published by Lewis et al. at NeurIPS in 2020. What I'm giving you here is a practical breakdown of how to combine well-established patterns and actually ship product with them.

See you at RSA.
